Introduction

Recently a client had quite an issue with DirectAccess. I won't go into the specifics, but I will say that, in addition to running a number of remediation scripts through Intune, we needed to contact a significant number of users directly. In these cases the user's device could not contact any of the on-premises domain controllers, and because of this some web-based applications could not be accessed.

We needed to initiate a remote viewing session with each user, using either Quick Assist or Teams. With Quick Assist we would normally be able to execute commands or scripts with elevated privileges, but in these cases that was not possible because no domain controller could be contacted to complete Windows authentication. Teams has a screen sharing feature, with the ability to take control of the user's desktop when required, but Teams is a collaboration application and not a remote troubleshooting tool, and there is not, as far as I know, any way of taking control with elevated privileges.

And so I thought the Live Response feature in the Microsoft 365 Defender cloud based solution might be the ticket. And so it was. You could almost say it was tailor made for our particular problem.

We had to achieve four things when we contacted each user:

1) Check child registry keys were cleared under HKLM:\Software\Policies\Microsoft\Windows NT\DNSClient\DnsPolicyConfig

2) Check the hosts file in c:\windows\system32\drivers\etc contained a specific IP address and URL

3) Run a PowerShell script if any of the above checks failed

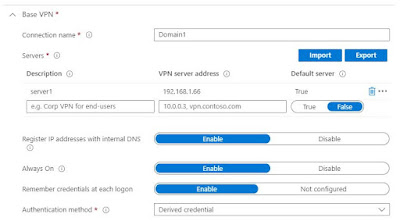

4) Connect via a backup VPN solution and run gpupdate.

Using Teams or Quick Assist we could perform items 1 and 2 but not item 3. In any case the session was often slow and cumbersome. We could possibly have guided the user through step 3 if they had local admin rights, but as a rule this was not the case.

And so the three R's of Live Response came to our rescue - Registry, Retrieve and Run. Live Response is a remote shell connection that gives administrators a set of powerful commands for troubleshooting devices with elevated privileges. Such troubleshooting makes no demands of the user: if the issue still allows them to do some of their work, they can carry on while the security administrator uses Live Response to troubleshoot and resolve the issue. It sounds cool and it is cool, as we shall see.

Note: This article will not cover step 4 - connect via a backup VPN solution and run gpupdate

Allowing Live Response

We permit the use of Live Response in the Microsoft 365 Defender portal by navigating to Settings\Endpoints\Advanced features. Turn on the Live Response setting, which allows users with appropriate RBAC permissions to investigate devices that they are authorized to access, using a remote shell connection.

In addition, if you need to run unsigned PowerShell scripts, turn on Live Response unsigned script execution.

Using Live Response

A Live Response remote shell is started by navigating, in the Defender portal, to Devices. In the Device Inventory blade you can type the name of the troubled device in the Search box.

Click on the returned device and then click on the ellipsis (three dots) on the right-hand side. Select Initiate Live Response Session from the drop-down list.

Your command console session is then established.

Registry

The first R of our list of requirements, as stated above, is to check a particular registry key in the HKLM hive. We do this by running the registry command with the path to the key.

registry "HKEY_LOCAL_MACHINE\Software\Policies\Microsoft\Windows NT\DNSClient\DNSPolicyConfig"

After a few seconds the results are returned.

There are clearly many child keys under the DnsPolicyConfig key, and so this particular device does not pass the first of our two checks.
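For anyone who would rather script this check than read the console output, the same test can be sketched in PowerShell. This is only an illustration, not part of the procedure we used: Get-DnsPolicyChildKeyCount is a hypothetical helper name, and the default path is simply the key discussed above.

```powershell
# Sketch only: count the child keys under the DnsPolicyConfig key.
# Get-DnsPolicyChildKeyCount is a hypothetical helper name, not a built-in cmdlet.
function Get-DnsPolicyChildKeyCount {
    param(
        [string]$Path = 'HKLM:\Software\Policies\Microsoft\Windows NT\DNSClient\DnsPolicyConfig'
    )

    # A key that does not exist has no child keys, so report zero.
    if (-not (Test-Path -LiteralPath $Path)) {
        return 0
    }

    # On a registry path Get-ChildItem returns the child keys;
    # registry values are properties of the key and are not counted.
    return @(Get-ChildItem -LiteralPath $Path -ErrorAction Stop).Count
}
```

Calling Get-DnsPolicyChildKeyCount with no arguments on the affected device returns 0 when the check passes and a positive number when child keys still need to be cleared.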

Retrieve

The second check, as detailed above, was to examine the hosts file. For this we have the getfile command:

getfile c:\Windows\system32\drivers\etc\hosts

We press enter and after a few seconds the hosts file is copied to our Downloads directory for us to examine.

A quick examination confirmed this file to contain the required IP address and URL.
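If you have many retrieved hosts files to look at, the examination could be scripted against the downloaded copy. The sketch below is an assumption on my part rather than what we did on the day: Test-HostsEntry is a hypothetical helper, and the IP address and host name in the usage line are placeholders, since the real values are not given here.

```powershell
# Sketch only: check whether a hosts file maps a host name to an expected IP address.
# Test-HostsEntry is a hypothetical helper name, not a built-in cmdlet.
function Test-HostsEntry {
    param(
        [Parameter(Mandatory)] [string]$HostsPath,
        [Parameter(Mandatory)] [string]$IPAddress,
        [Parameter(Mandatory)] [string]$HostName
    )

    foreach ($line in Get-Content -LiteralPath $HostsPath) {
        # Drop anything after a '#' comment marker, then trim whitespace.
        $entry = ($line -split '#', 2)[0].Trim()
        if (-not $entry) { continue }

        # A hosts entry is an IP address followed by one or more names.
        $fields = $entry -split '\s+'
        $names  = $fields | Select-Object -Skip 1
        if ($fields[0] -eq $IPAddress -and $names -contains $HostName) {
            return $true
        }
    }
    return $false
}

# Usage (placeholder path and values):
# Test-HostsEntry -HostsPath "$HOME\Downloads\hosts" -IPAddress '203.0.113.10' -HostName 'app.example.internal'
```

The name comparison is case-insensitive, which matches how host names are resolved.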

Run

In this particular example, one of our two checks failed: the hosts file checked out OK, but the DnsPolicyConfig key contained child keys that we needed to remove to resolve our DirectAccess (DA) issue.

To do this I need to do the following.

1) Upload my script, called cleanDNS.ps1, to the Live Response library.

2) Run the following command in the command shell: run cleanDNS.ps1

Uploading the Script

This is done by clicking on Upload file to library, which is located on the right hand side above the command shell.

The next steps are fairly intuitive. Click on Choose File, then browse to and select the PowerShell script to run. I chose my cleanDNS.ps1 file.

The next step is to click on Confirm.

Note: The cleanDNS.ps1 script is very simple and contains the following command - Remove-Item -path "HKLM:\Software\Policies\Microsoft\Windows NT\DNSClient\DnsPolicyConfig\*" -recurse
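If you would like the script to report what it did in the Live Response console, a slightly more defensive variant might look like the sketch below. This is not the script we ran, only an illustration built on the same Remove-Item idea; it assumes the script runs with enough privilege to delete keys under HKLM.

```powershell
# Sketch only: a more defensive variant of cleanDNS.ps1 (not the script used in this article).
$path = 'HKLM:\Software\Policies\Microsoft\Windows NT\DNSClient\DnsPolicyConfig'

# Nothing to clean if the parent key is absent.
if (-not (Test-Path -LiteralPath $path)) {
    Write-Output "Nothing to do: $path does not exist."
    return
}

$childKeys = @(Get-ChildItem -LiteralPath $path)
Write-Output "Removing $($childKeys.Count) child key(s) under $path"

# Remove each child key and everything beneath it; the parent key itself is kept.
foreach ($key in $childKeys) {
    Remove-Item -LiteralPath $key.PSPath -Recurse -Force -ErrorAction Stop
}

# Report what is left so the result is visible in the console output.
$remaining = @(Get-ChildItem -LiteralPath $path).Count
Write-Output "Child keys remaining: $remaining"
```

Stopping on the first error means a partial clean-up shows up as a failure rather than passing silently.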

Run cleanDNS.ps1

Now we can run our script using the run <name of script> command.

So we type in run cleanDNS.ps1 and press Enter. The script executes successfully.

Note: The name of the file is case sensitive.

We have now remediated the registry issue. For this real-world example, the next step was to connect the device using the backup VPN solution and run gpupdate, which would populate the DnsPolicyConfig key with the required child keys, properties and values. After a reboot the DA feature was working once more and the user could access their required internal web-based applications.

I hope you enjoyed reading this article, and I wish you every success with your testing of the Live Response feature in Microsoft 365 Defender.

Colin